
Customer Service

Product Services

The most complete game selection across every platform

Game License

Official and secure license from PAGCOR

Member Services

Deposit

Time

Minutes

WITHDRAW

Time

Minutes

Payment System


HOKI1881 | The Most Complete Online Games, 24 Hours a Day

HOKI 1881 Online Games: the most complete and trusted selection of online games, only at HOKI1881

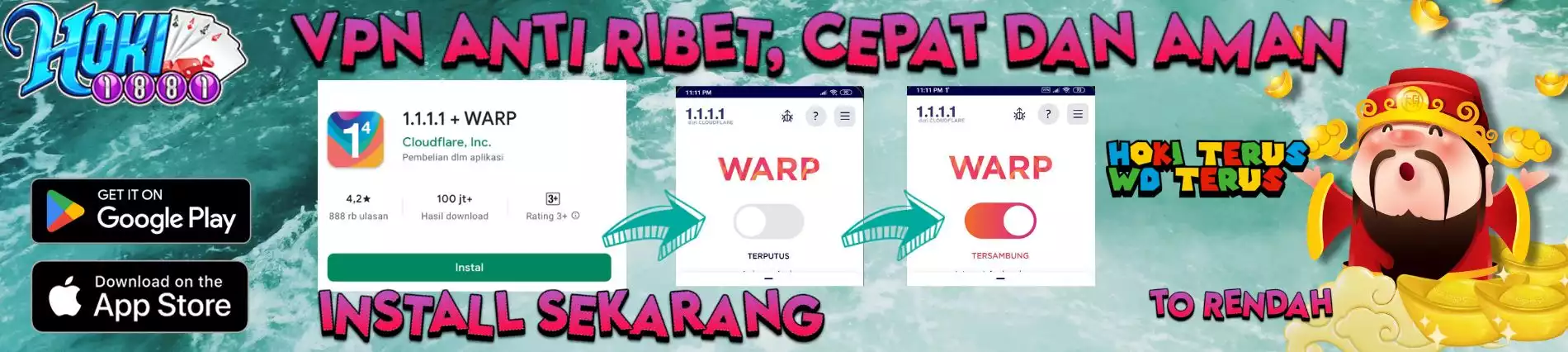

Play your favorite online games and chase the biggest luck and wins at HOKI1881, the most complete and trusted online gaming site, playable with a single ID on iOS and Android devices and on the website.

At HOKI 1881, your security and satisfaction are our top priority. With encryption technology, you can play at HOKI 1881 with peace of mind, knowing that your personal information is always safe with us. Our customer service team is always ready to help with any questions or issues you may have, 24 hours a day, 7 days a week.